
Linux logs can be a goldmine of information, but extracting actionable insights from them requires the right tools and techniques. Whether you work with command-line utilities or dedicated log analysis tools, log analysis is an essential skill for any system administrator who needs to uncover critical system performance indicators and security incidents.

In this section, we’ll look at a set of command-line tools for searching and parsing logs, and explore how log management solutions such as SolarWinds® Loggly® can automate and enhance the log analysis process.

The Power of grep for Log Analysis

grep, a command-line tool, is a natural starting point for log analysis. It quickly scans files for lines that match a text pattern, which makes simple text-based searches easy. For instance, to find lines containing the phrase “user hoover” in the authentication log, use the following command:

```bash
$ grep "user hoover" /var/log/auth.log
```

Leveraging the Versatility of Regular Expressions

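Regular expressions (regex) support far more precise search patterns than plain text matching, because they can describe the context around a value as well as the value itself. For example, to isolate entries for a particular port, the command below uses GNU grep's -P option (Perl-compatible regular expressions) with the lookbehind (?<=port\s), so 4792 matches only when it directly follows the word “port” and a whitespace character: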

```bash
# \b requires a word boundary after 4792, so "port 47920" is not matched
$ grep -P "(?<=port\s)4792\b" /var/log/auth.log
```

Gaining Context with Surround Search

Surround search retrieves lines before and after each match, giving you the context around an event, such as what happened just before or after a failed login. The -B option sets how many lines to show before each match and -A how many after, so the command below prints three lines before and two lines after every line containing “Invalid user”:

```bash
$ grep -B 3 -A 2 'Invalid user' /var/log/auth.log
```

Real-Time Insight with the Tail Command

tail, another command-line tool, shows the end of a file. tail -n N prints the last N lines, which is a quick way to check the most recent entries, while tail -f keeps the file open and prints new lines as they are written, which makes it useful for real-time monitoring of ongoing system activity:

```bash
$ tail -n 5 /var/log/messages
$ tail -f /var/log/messages
```

Introduction to cut and Awk for Advanced Parsing

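The cut command extracts fields from delimited log lines, while Awk is a complete scripting language that can filter lines and parse fields in one step. Pulling out individual fields, rather than reading whole lines, makes it possible to count, group, and compare values. In the example below, grep selects authentication failures, and cut splits each line on “=” and prints the eighth field, which in the usual pam_unix failure message is the user name: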

```bash
$ grep "authentication failure" /var/log/auth.log | cut -d '=' -f 8
```
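Fixed positions such as -f 8 only hold for one message layout: if a line contains one more or one fewer “=”, every later field shifts. A less brittle approach, sketched below under the assumption that failure lines carry a “user=NAME” token as pam_unix messages usually do, is to isolate that token with grep -o before handing it to cut, and then count the results. The log lines and the count_failed_users function name are hypothetical, and the sample is written to a temporary file so the script runs anywhere; on a real system you would pass /var/log/auth.log instead.

```bash
#!/usr/bin/env bash
set -euo pipefail

# count_failed_users is a hypothetical helper, not a standard command.
# It prints "COUNT NAME" for each user with authentication failures,
# most frequent first.
count_failed_users() {
  local logfile=$1
  # Select failure lines, keep only the " user=NAME" token (the leading
  # space prevents a match inside "ruser="), split it on "=" to get NAME,
  # then count identical names and sort by count, highest first.
  grep "authentication failure" "$logfile" \
    | grep -o ' user=[^ ]*' \
    | cut -d '=' -f 2 \
    | sort | uniq -c | sort -rn
}

# Hypothetical sample lines in the pam_unix failure format, plus one
# unrelated line that the first grep filters out.
log=$(mktemp)
trap 'rm -f "$log"' EXIT
cat > "$log" <<'EOF'
Mar 24 08:28:18 web1 sshd[32701]: pam_unix(sshd:auth): authentication failure; logname= uid=0 euid=0 tty=ssh ruser= rhost=203.0.113.5  user=hoover
Mar 24 08:29:02 web1 sshd[32705]: pam_unix(sshd:auth): authentication failure; logname= uid=0 euid=0 tty=ssh ruser= rhost=203.0.113.5  user=hoover
Mar 24 08:30:41 web1 sshd[32710]: pam_unix(sshd:auth): authentication failure; logname= uid=0 euid=0 tty=ssh ruser= rhost=198.51.100.7  user=root
Mar 24 08:31:10 web1 sshd[32712]: Accepted publickey for deploy from 192.0.2.10 port 50022 ssh2
EOF

count_failed_users "$log"
```

For the sample lines, the script reports 2 failures for hoover and 1 for root. The leading space in the ' user=' pattern is deliberate: without it, grep -o would also match the empty ruser= field.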

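Awk can perform the matching and the extraction in a single command. The one below prints the ninth whitespace-separated field of every sshd line containing “invalid user”. The match is case-sensitive, and which field holds the user name depends on the exact message format, so check a sample line before relying on a fixed position:

```bash
$ awk '/sshd.*invalid user/ { print $9 }' /var/log/auth.log
```

Streamlining Log Analysis with Log Management Systems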

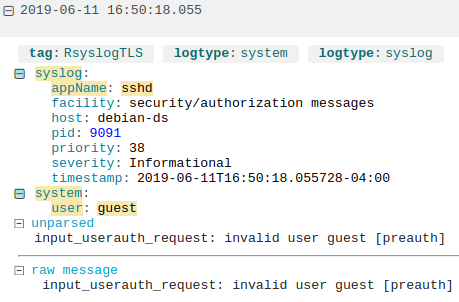

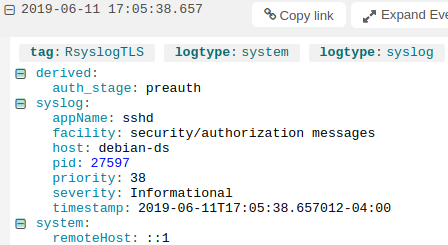

Log management systems, such as SolarWinds Papertrail and Loggly, streamline log analysis by automatically parsing common log formats and indexing their fields. These platforms offer live tailing and fast searches over indexed fields, which helps when log volumes grow too large to handle comfortably with grep and tail: